Spark Shell Commands

Updated: 05/20/2021 by Computer Hope

This tutorial provides a quick introduction to using Spark. We will first introduce the API through Spark’s interactive shell (in Python or Scala), then show how to write applications in Java, Scala, and Python.

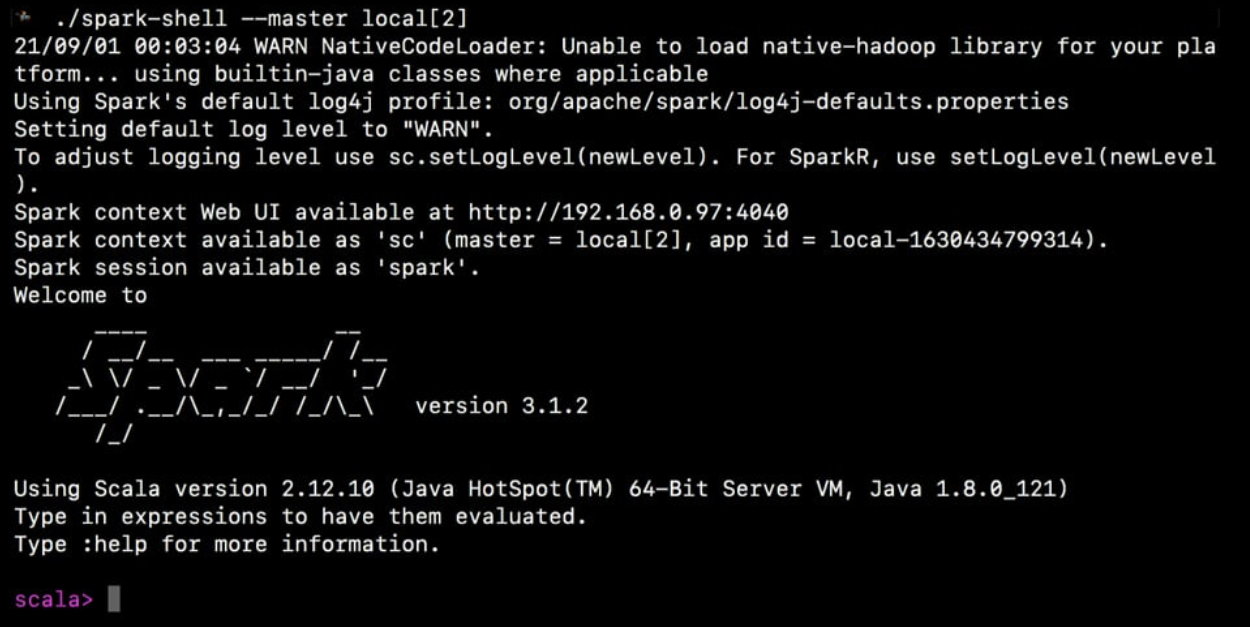

Using Spark shell

You start the Spark shell using the spark-shell script.

When you execute spark-shell, you are actually executing spark-submit as follows:

org.apache.spark.deploy.SparkSubmit --class org.apache.spark.repl.Main --name "Spark shell" spark-shell

The Spark shell creates an instance of SparkSession named spark for you (so on day 1 you don't need to know the details of how to create one yourself).

scala> :type spark

org.apache.spark.sql.SparkSession
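Outside the shell, for example in a standalone application, you have to create the SparkSession yourself. The following is a minimal sketch, assuming Spark SQL is on the classpath and that a local master (`local[*]`) is acceptable; the object name `CreateSessionExample` and the helper `createSession` are hypothetical, and the application name simply mirrors the `--name` value shown above.

```scala
import org.apache.spark.sql.SparkSession

object CreateSessionExample {
  // Hypothetical helper: builds (or reuses) a local session, roughly what
  // spark-shell does for you before binding the result to `spark`.
  def createSession(): SparkSession =
    SparkSession.builder()
      .appName("Spark shell") // assumption: mirrors the shell's --name value
      .master("local[*]")     // assumption: run locally on all available cores
      .getOrCreate()

  def main(args: Array[String]): Unit = {
    val spark = createSession()

    // Sanity check: spark.range(5) is a Dataset with the five rows 0..4.
    println(s"Spark ${spark.version}: ${spark.range(5).count()} rows")

    // A standalone application should stop its session; in spark-shell you
    // normally leave `spark` running until you exit the shell.
    spark.stop()
  }
}
```

`getOrCreate()` returns an already active session if one exists, which is why the same builder call is safe to run more than once in a single JVM.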

In addition, the shell creates a value named sc, which is an instance of SparkContext.

scala> :type sc

org.apache.spark.SparkContext
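The shell's `sc` is the SparkContext that backs `spark`, so in your own code you can obtain the equivalent value from the session with `spark.sparkContext`. A minimal sketch, assuming a local master; the object name `ContextExample` and the helper `sumOneTo` are hypothetical.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.sql.SparkSession

object ContextExample {
  // Hypothetical helper: distributes 1..n as an RDD and sums it.
  // sum() is available on numeric RDDs and returns a Double.
  def sumOneTo(sc: SparkContext, n: Int): Double =
    sc.parallelize(1 to n).sum()

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("ContextExample")
      .master("local[*]") // assumption: local mode for illustration
      .getOrCreate()

    // Equivalent of the shell's `sc` value.
    val sc: SparkContext = spark.sparkContext

    println(sumOneTo(sc, 10)) // prints 55.0
    spark.stop()
  }
}
```

In the shell itself you can skip the setup and call `sc.parallelize(1 to 10).sum()` directly.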

Below is a screenshot of the output of a Word Count program in Apache Spark.

This code is run in the Spark shell.
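A word count of this kind can be sketched as follows. This is a minimal, self-contained version written as a standalone program, assuming a local master and an in-memory sample of two lines instead of an input file; in the shell you would use the existing `sc` and could read a file with `sc.textFile(path)`. The names `WordCountExample` and `wordCounts` are hypothetical, and this is not necessarily the exact program shown in the screenshot.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.sql.SparkSession

object WordCountExample {
  // Split each line on whitespace, drop empty tokens, pair every word
  // with 1, then add up the 1s per word with reduceByKey.
  def wordCounts(sc: SparkContext, lines: Seq[String]): Map[String, Int] =
    sc.parallelize(lines)
      .flatMap(_.split("\\s+"))
      .filter(_.nonEmpty)
      .map(word => (word, 1))
      .reduceByKey(_ + _)
      .collect() // bring the (word, count) pairs back to the driver
      .toMap

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("WordCountExample")
      .master("local[*]") // assumption: local mode for illustration
      .getOrCreate()

    val counts = wordCounts(spark.sparkContext, Seq("to be or", "not to be"))

    // Print in alphabetical order: be: 2, not: 1, or: 1, to: 2
    counts.toSeq.sortBy(_._1).foreach { case (w, c) => println(s"$w: $c") }

    spark.stop()
  }
}
```

Calling `collect()` is fine for a small sample like this one; for large inputs you would typically write the result out (for example with `saveAsTextFile`) instead of pulling everything into the driver.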