Spark with Scala: Word Count, a First Example for Beginners

Updated: 01/20/2021 by Computer Hope

In this Spark Scala tutorial, you will learn:

- The steps to install Spark

- How to deploy your own Spark cluster in standalone mode

- How to run your first Spark program: a Spark word count application

Below is the source code for the Word Count program in Apache Spark:

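```scala
import org.apache.spark.{SparkConf, SparkContext}

object SparkWordCount {

  def main(args: Array[String]): Unit = {
    // Run locally on all available cores. For a standalone cluster, use its
    // master URL instead (spark://<host>:7077). In a notebook such as
    // Databricks, `sc` is already defined; reuse it instead of creating one.
    val conf = new SparkConf().setAppName("Word Count").setMaster("local[*]")
    val sc = new SparkContext(conf)

    try {
      // Read the input file as an RDD of lines.
      val input = sc.textFile("input.txt")

      // Split each line into words, pair each word with 1, then sum the 1s per word.
      val count = input
        .flatMap(line => line.split("\\s+"))
        .filter(_.nonEmpty) // drop empty tokens from leading spaces or blank lines
        .map(word => (word, 1))
        .reduceByKey(_ + _)

      // Writes a directory named "outfile" containing part-NNNNN files.
      // The call fails if that directory already exists.
      count.saveAsTextFile("outfile")
      println("OK")
    } finally {
      sc.stop()
    }
  }
}
```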
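The following sketch shows what each stage of the pipeline does without needing a Spark installation. It applies the same three steps to an in-memory Scala `Seq`: split lines into words on whitespace, pair each word with 1, and sum the 1s per word. It is an illustrative assumption and not part of the original program, and the object name `LocalWordCount` is hypothetical. Here `groupBy` followed by a per-key sum stands in for `reduceByKey`. On an RDD, `reduceByKey` additionally combines values within each partition before shuffling.

```scala
// Plain Scala collections only; no Spark dependency.
object LocalWordCount {
  // Same three steps as the RDD pipeline, applied to an in-memory Seq of lines.
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split("\\s+")) // one element per word, like RDD.flatMap
      .filter(_.nonEmpty)                  // drop empty tokens from leading spaces or blank lines
      .map(word => (word, 1))              // pair each word with a count of 1
      .groupBy(_._1)                       // gather the pairs that share a word
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // add up the 1s, like reduceByKey(_ + _)

  def main(args: Array[String]): Unit = {
    val counts = wordCount(Seq("to be or", "not to be"))
    // Sort by word so the printed order is deterministic.
    counts.toSeq.sortBy(_._1).foreach(println)
  }
}
```

Running `main` prints `(be,2)`, `(not,1)`, `(or,1)` and `(to,2)`, one per line.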

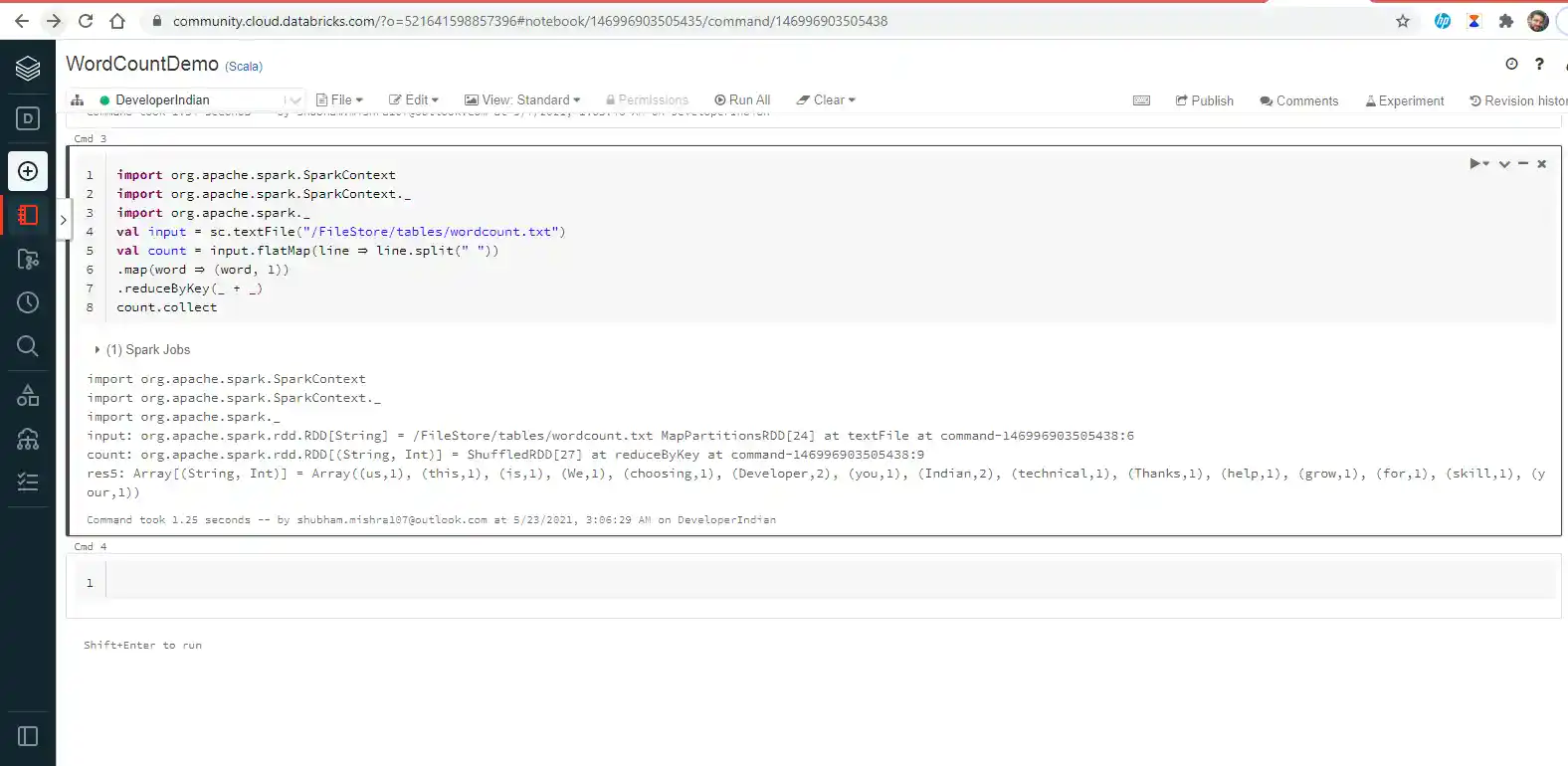

The screenshot above shows the output of the Word Count program in Apache Spark, with the Scala code running in a Databricks notebook.
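`saveAsTextFile` writes `outfile` as a directory of `part-NNNNN` files, one per partition. Each element is written on its own line using its `toString`, so the `(word, count)` pairs produced here appear as lines such as `(spark,3)`. The sketch below shows one way to load those lines back into plain Scala values. The helper `parseLine` and the sample lines are hypothetical and are not output from the program above. Because words are split only on whitespace, a token can keep punctuation such as a trailing comma. For that reason, the sketch reads the count after the *last* comma.

```scala
object ParseWordCountOutput {
  // Hypothetical helper: parses one line written by saveAsTextFile for an RDD[(String, Int)].
  // Assumes a well-formed line of the form "(word,count)".
  def parseLine(line: String): (String, Int) = {
    val body = line.stripPrefix("(").stripSuffix(")")
    val cut = body.lastIndexOf(',') // the word itself may contain commas
    (body.substring(0, cut), body.substring(cut + 1).toInt)
  }

  def main(args: Array[String]): Unit = {
    // Sample lines as they might appear in a part-00000 file (illustrative data only).
    val lines = Seq("(spark,3)", "(hello,,1)")
    lines.map(parseLine).foreach(println)
  }
}
```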